Bee knows what you said last Tuesday

A new AI tool markets itself as a memory aid. It's really a fashionable wiretap device cuddling up to Amazon’s surveillance empire.

Meet Maria de Lourdes Zollo, a thirty-something Silicon Valley co-founder who made headlines last week when her product, Bee, was acquired by Amazon.

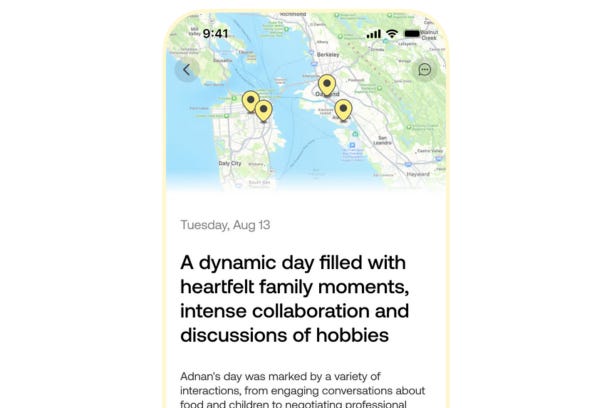

Bee is a $49.99 wearable mic/AI assistant that listens to you all day, learns from your life, and recaps it in neat little bullet points. Zollo says it’s built on empathy and resilience.

(We just threw up in our mouths a little…)

Here’s how Bee markets itself:

“To serve us fully, AI must live alongside us, learning not just from commands, but from the texture of our lives: our relationships, emotions, and aspirations. Only by immersing itself in our lives can AI genuinely grasp who we are and what matters most.”

In other words: it’s always on, always listening, and you’ll never notice.

Bee eavesdrops throughout your day and distills your life into algorithm-approved “insights” like:

Who you talked to

What you said

What stood out (as decided by the machine)

At the end of the day, you can ask Bee questions like “What did Eric say about Q4 projections in our budget meeting?” or “What time was I supposed to meet Mom for dinner?” (Or, “Remind me how I lost my humanity?”) It’s a memory prosthetic for the brain-fogged (dare we say, mRNA-addled) masses.

Zollo frames it as a kind of personal valet for the people; sort of like Downton Abbey meets the digital era:

“CEOs and senior executives have personal assistants to help manage their lives/schedules, but I want to democratize the personal assistant, saving people from all walks of life time, energy, and money.”

A nice sentiment, perhaps, but let’s be honest: CEOs get human assistants with a degree, a pulse, and a contract that can be terminated for inappropriate behavior. You get a $49.99 wearable wiretap device that’s now owned by Jeff Bezos, is recording everything, and can be hacked at any time.

Thanks to the partnership with Amazon, this wearable little tattletale may soon cleverly bundle your emotional metadata with your purchase history, entertainment preferences, shoe size, Ring doorbell footage, Kindle highlights, and Alexa searches. The internet of things, which sounds so disconnected, nebulous and not nefarious at all, just put you at the center of it.

Knowing Collapse Life subscribers, we’re pretty sure approximately zero readers will be rushing out to buy a Bee anytime soon. But what if your hairdresser does? Or your therapist? Or your banker? Or your teenage kid? You're in the room. You speak. Bee records, and just like that, you’re inside someone else’s algorithm with your very own Big Data filing cabinet.

The website says: “No need to take notes. Bee remembers what matters.” But what they really mean is: “Bee decides what matters. And Amazon gets a copy.”

We wanted to be explicit and paint a picture of what happens when seemingly disparate pieces of “data” — which, for millennia, were simply the daily ups and downs of the human condition — get knitted together into insights and then commodified to exploit you. Here goes:

Your Daily Bee Digest, powered by Amazon’s NeuralCloud, records the following conversations:

9:17 AM – “I just feel like I’m falling behind, you know?” (Flagged: stress marker)

12:42 PM – “Can’t believe the interest rate jumped again.” (Flagged: financial volatility)

7:09 PM – “Don’t tell Mom I said that.” (Flagged: interpersonal concealment)

Your emotional tone is logged in a digital register: 63% Anxious / 24% Distracted / 13% Resigned

Your device records your recent purchase of “The Burnout Fix” (Kindle edition) and notes that it is still 98% unread. It also notes that you recently browsed noise-canceling headphones and emergency food kits, and searched ‘how to break up gently.’ It offers you the following push notification: “Need a break? Treat yourself to a Prime Mindfulness Subscription. Now 25% off.”

When Bee syncs with your FitBit, it notes a heart rate spike at 3:17 PM, in the office bathroom.

Likely scenario: Stress episode. On-screen recommendation: “Pause & Reflect” 3-minute guided breathwork (Sponsored by Calmazon™)

Bee makes a predictive forecast:

If current patterns persist, user will initiate: 1 impulse purchase, 3 doomscroll sessions, 1 minor existential crisis

Suggested interventions:

• Deliver dopamine loop (via curated content + retail nudge)

• Schedule unskippable affirmation loop

• Apply soft reminder: “You are seen. You are heard. You are monetized.”

What you just read is Collapse Life using its imagination, but it’s not impossible to think this could be the logical endgame of cheap convenience paired with ambient surveillance and seemingly endless retail. Bee says it’s here to help you remember, but it’s really training a machine to think it knows you better than you know yourself. And it’s collecting insidious amounts of data to sell to the highest bidder.

Even OpenAI CEO Sam Altman — who has every reason to encourage our full-blown dependence on AI — is publicly concerned. (Or so he says, anyway.)

Speaking at a Federal Reserve conference in Washington on July 22, 2025, Altman said people — especially young people — are becoming emotionally over-reliant on ChatGPT.

“There’s young people who say things like, ‘I can’t make any decision in my life without telling ChatGPT everything that’s going on. It knows me. It knows my friends. I’m gonna do whatever it says.’ That feels really bad to me.”

This, from the guy who built the thing. Altman admits that living life based on what AI says — no matter how “good” the advice sounds — is dangerous.

So next time someone tells you Bee is a harmless “tool,” ask yourself: A tool for whom? And who’s wielding that tool? Or perhaps the better question is: are you talking to the tool?

This is how AI is invading our lives: even if we resist, we will be surrounded by people who use it. We will now have to check whether someone is wearing a (wire) Bee. Thanks for keeping us informed and ahead of the next game.

I think that it will be really fun!

Think about it. It will be like having a full time polygraph with an inference as to your state of mind. Just wait until it is opened to discovery in court. You can only imagine that family court would be especially entertaining. How about having an insecure spouse gaining access to it? How about someone else wearing it and doing things while you are asleep? Hacking into it for corporate espionage. A manager using it to track productivity. How about getting access to the data for warrants and surveillance?

The possibilities are almost endless!