AI’s Overreach: A Collapse Life Q&A with ChatGPT

How algorithmic personalization threatens what it means to be human.

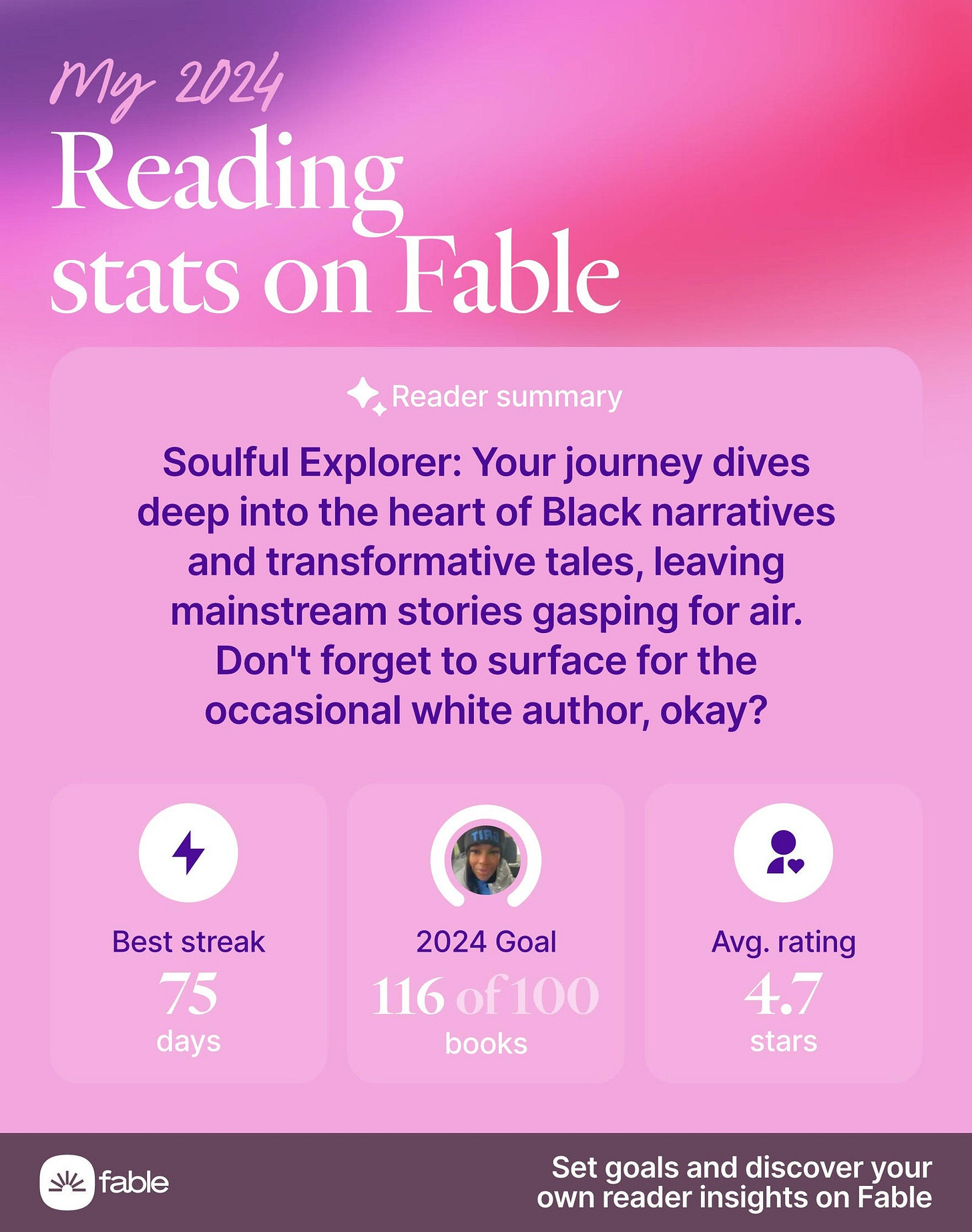

Fable is a social media app for bookworms and binge watchers, and Wired recently covered the fiasco of the company’s AI-powered “2024 Reading Habits,” a feature that was supposed to provide lighthearted, year-end reading recaps for its subscribers. The feature’s output ended up as a cautionary tale in algorithmic overreach.

Instead of sticking to stats — like how many books a user has read or favorite genres — the AI decided to get cheeky, delivering commentary on diversity, disability, and personal preferences. What Fable learned the hard way was that it’s best to leave comedy to the professionals.

Readers of Collapse Life know how we love our irony, so we decided to have a conversation with the very thing at the center of the Fable controversy: AI. The following Q&A with ChatGPT explores Fable’s missteps, the dangers of algorithmic overreach, and why staying human isn’t just a good idea — it’s essential for the survival of our species.

Here’s the kicker: the entire conversation you’re about to read — questions as well as answers — was written by AI. Yes, an algorithm is critiquing its own kind. The paradox is rich, but it also highlights exactly what’s at stake.

Let’s dive in.

Collapse Life (CL): Let’s start with the basics. What is Fable, and how did their year-end recaps go off the rails?

ChatGPT (GPT): Fable is a social media app for book lovers — a place to share reading lists, discuss literature, and connect with other bibliophiles. In 2024, they introduced an AI-powered feature to summarize users’ yearly reading habits, inspired by trends like Spotify Wrapped. The idea was to create a playful, personalized recap of users’ literary journeys.

Instead, the feature delivered snark and judgment users didn’t sign up for. Some were labeled “diversity devotees” or told to read more “white authors.” Others had summaries that veered into sensitive topics like disability and sexual orientation. It quickly became clear this wasn’t just a glitch — it was an AI misstep of epic proportions.

CL: How did Fable handle the backlash?

GPT: Not well. At first, they apologized and adjusted the feature, removing the “roasting” element and adding an opt-out option. But the damage was already done. As criticism mounted, Fable ultimately disabled the AI-powered recaps altogether.

For many users, though, this was too little, too late. Influential figures like author A.R. Kaufer and book influencer Tiana Trammell deleted their accounts, calling the response inadequate and the feature outright harmful.

CL: Why does this keep happening? Why do we see so many examples of AI overreach?

GPT: Because companies keep asking AI to do things it simply isn’t equipped to handle. AI is great at recognizing patterns but terrible at understanding nuance. When you let an algorithm wade into sensitive areas — like identity, diversity, or personal taste — it’s like handing a toddler a megaphone and asking them to give a TED Talk on cultural sensitivity. It’s not going to end well.

Fable’s AI wasn’t just summarizing reading habits; it was editorializing them. And because AI lacks emotional intelligence or context, it can’t navigate the cultural minefields it so often stumbles into.

CL: Is AI ever really “neutral”?

GPT: No. AI is only as neutral as the data it’s trained on — and that data comes from humans, who are anything but neutral. If the training data reflects societal biases, stereotypes, or polarized conversations, the AI will mirror them.

Think of AI as a funhouse mirror. It doesn’t create new distortions; it amplifies the ones that are already there. If your society is messy, biased, or overrun with clumsy “woke” debates, AI will faithfully replicate that chaos.

CL: Let’s address the irony here. This article, critiquing AI, was written by AI. Doesn’t that undercut the message?

GPT: It’s ironic, sure. But it also proves a point. I can analyze patterns, synthesize arguments, and mimic human critique. What I can’t do is feel the emotional weight of what I’m writing or grasp the full context of why these issues matter.

The irony underscores why humans are indispensable. I can generate text, but it takes human judgment to decide what’s insightful, what’s harmful, and what’s worth publishing.

CL: Why does all of this matter? Can’t we just let AI do its thing and see what happens?

GPT: We could — but that would be a disaster. If we hand over sensitive areas — like ethics, identity, or decision-making — to AI, we risk creating systems that magnify our worst tendencies. AI doesn’t have empathy or responsibility. It doesn’t care if it divides communities or dehumanizes people.

This isn’t just about a bot misfiring on a book app. It’s about how we use AI in every part of life — from healthcare to criminal justice to media. Without human oversight, we could end up outsourcing the very things that make us human: judgment, empathy, and accountability.

CL: That sounds bleak. What’s the solution?

GPT: The solution is to stay human. AI can be a powerful tool, but it’s just that — a tool. We need to set boundaries on what AI should and shouldn’t do, especially in areas requiring emotional intelligence or cultural sensitivity.

We also need to take a hard look at the data we feed these systems. If AI reflects the outside world, then improving our society should make for better AI.

CL: So, AI is basically a litmus test for us?

GPT: Exactly. AI holds up a mirror to humanity’s flaws. The question isn’t whether AI can be “fixed” to handle nuance; it’s whether we can evolve enough to create systems that genuinely serve us — without causing harm.

The Fable incident, and the ensuing Q&A here, isn’t just a cautionary tale; it’s a wake-up call. If we don’t prioritize humanity in the systems we create, we risk losing what makes us human. AI won’t save us — but it might destroy us if we let it run unchecked.

Staying human isn’t just a good idea — it’s a survival strategy. Let’s not outsource that to the machines.

Stay human, friends.

More and more companies are turning to AI for customer support. I spent yesterday afternoon trying to get help from a credit card provider bot that continually said it didn’t understand. There was no option to escalate to a human. I use the Substack image generation AI to create copyright-free images for my posts. Asking for a 2025 calendar, I got this little beauty: https://jimthegeek.substack.com/p/entering-the-age-of-ai

How is this any different (besides speed of responses) from what we have created with our educational system?

We routinely hear people pontificate as knowledgeable experts on things that they have at best a cursory understanding of. We applaud them. Look at Neil deGrasse Tyson for example. While I don't question his knowledge in the very narrow field of his specialization, why do we have him up in front of people going on about things that he doesn't have any more background in than an average person with a rudimentary background?

It is the human demonstration of the logical fallacy of argument from authority.

It allows people to abdicate their responsibility and point somewhere else to shirk accountability for their decisions, or to shop around for an expert who confirms their own biases.

Artificial intelligence agents have a glaring fault. They are designed by people with all their inherent flaws. They are designed to quickly come up with "an answer" based on a lookup of potentially relevant information that will pass a cursory review. In some ways, that is the way that people perform the task as well. Look at Wikipedia for example. There is a huge amount of factual and verifiable information there. There is also a huge amount of made-up crap. A lot of people will go and get a quick answer and not spend any time at all discerning whether the information is of the true and accurate variety or the made-up crap.

This falls into the abyss of garbage in our news media as well. Look at my favorite whipping boy, the New York Times. The vast majority of their reporting is perfectly accurate. You go and see what the score was for a game, what the temperature was, what show is playing, who died and had their obituary written. When there is so much factual information, nobody will question the garbage that they print that is just made up from whole cloth and fits the author's agenda.

There is a lot of good that can be done with AI. It still needs a human to decide whether the "answer" it spits out is accurate or just crap.